News | July 26, 2017

The Dark Side of the Crater

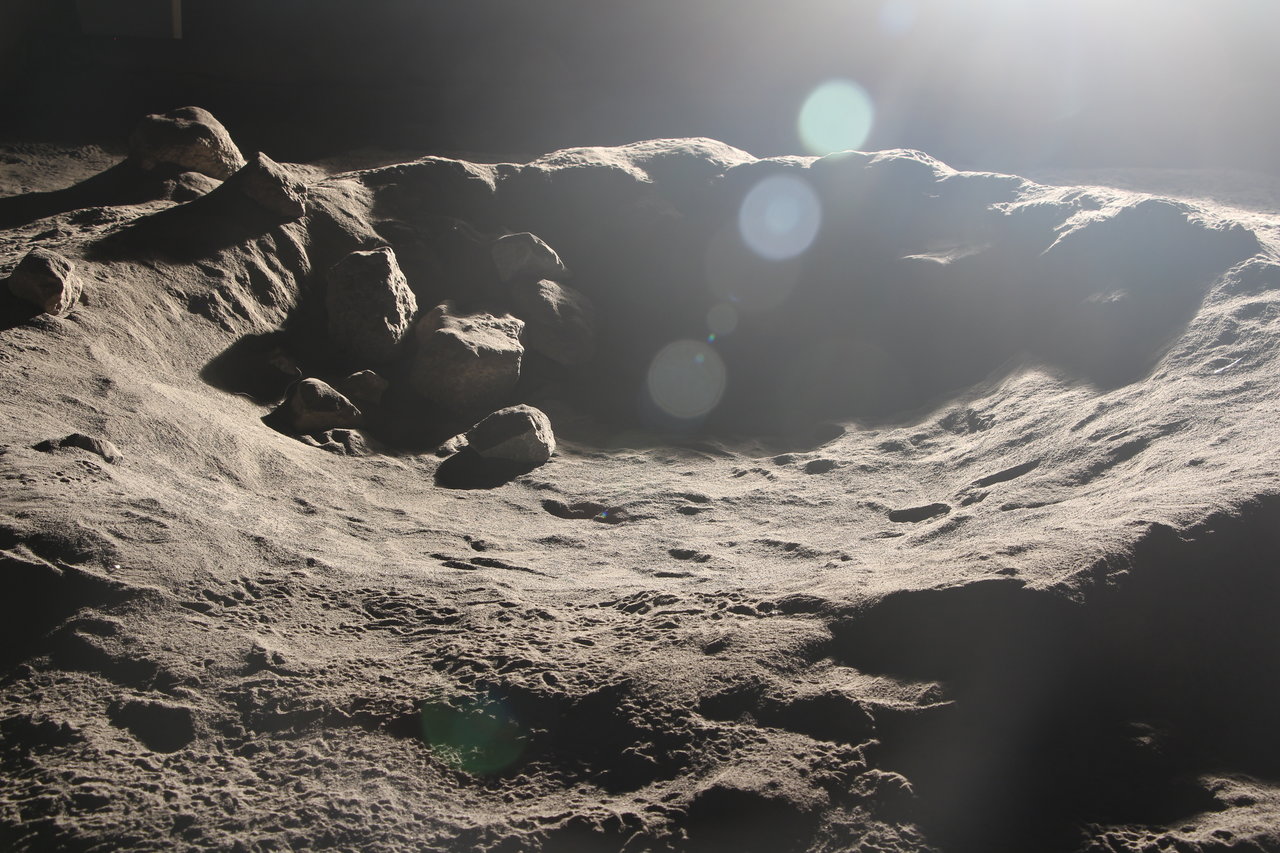

The Lunar Lab testbed at NASA Ames Research Center – a 12-foot-square sandbox containing eight tons of JSC-1A, a human-made lunar soil simulant. Credits: NASA/Uland Wong

How Light Looks Different on the Moon and What NASA Is Doing About It

Things look different on the Moon. Literally.

Because the Moon isn't big enough to hold a significant atmosphere, it has no air molecules or airborne particles to reflect and scatter sunlight. On Earth, shadows in otherwise bright environments are dimly lit by indirect light from these tiny reflections. That lighting provides enough detail that we get an idea of shapes, holes and other features that could be obstacles to someone – or some robot – trying to maneuver in shadow.

"What you get on the Moon are dark shadows and very bright regions that are directly illuminated by the Sun – the Italian painters in the Baroque period called it chiaroscuro – alternating light and dark," said Uland Wong, a computer scientist at NASA's Ames Research Center in Silicon Valley. "It's very difficult to be able to perceive anything for a robot or even a human that needs to analyze these visuals, because cameras don't have the sensitivity to be able to see the details that you need to detect a rock or a crater."


In addition, the dust covering the Moon is itself otherworldly. The way light reflects off the jagged shapes of individual grains, along with the uniformity of their color, means the surface looks different when it's lit from different directions. It loses visible texture at certain lighting angles.

Some of these visual challenges are evident in Apollo mission surface images, but the early lunar missions mostly waited until lunar “afternoon” so astronauts could safely explore the surface in well-lit conditions.

Future lunar rovers may target unexplored polar regions of the Moon to drill for water ice and other volatiles that are essential to human exploration missions but heavy to bring along. At the Moon’s poles, the Sun is always near the horizon, and long shadows hide many potential dangers in the terrain, like rocks and craters. Pure darkness is a challenge for robots that need to use visual sensors to safely explore the surface.
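To get a feel for how long those polar shadows can be, here is a minimal sketch using basic trigonometry. It assumes perfectly flat ground, and the rock height and Sun elevation angles are made-up illustrative values, not figures from Wong's team. The function name `shadow_length` is likewise just for this example.

```python
import math


def shadow_length(height_m: float, sun_elevation_deg: float) -> float:
    """Length of the shadow an object casts on flat ground.

    Simple geometry: shadow = height / tan(elevation of the Sun above the horizon).
    """
    if not 0 < sun_elevation_deg <= 90:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    return height_m / math.tan(math.radians(sun_elevation_deg))


# Hypothetical half-meter rock under a high, a low, and a very low Sun.
ROCK_HEIGHT_M = 0.5
for elevation_deg in (45.0, 10.0, 2.0):
    length_m = shadow_length(ROCK_HEIGHT_M, elevation_deg)
    print(f"Sun at {elevation_deg:4.1f} deg: shadow is {length_m:5.1f} m long")
```

With the Sun only 2 degrees above the horizon, that half-meter rock throws a shadow about 14 meters long, which is plenty of room to hide a crater or another rock.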

Wong and his team in Ames' Intelligent Robotics Group are tackling this by gathering real data from simulated lunar soil and lighting.

"We're building these analog environments here and lighting them like they would look on the Moon with solar simulators, in order to create these sorts of appearance conditions," said Wong. "We use a lot of 3-dimensional imaging techniques, and use sensors to create algorithms, which will both help the robot safeguard itself in these environments, and let us train people to interpret it correctly and command a robot where to go."

An audio version of this feature is available on the "NASA in Silicon Valley" podcast.